Overview

Pervasive access to large language models (LLMs) such as ChatGPT means that conversational AI has already found its way into our daily conversations and interactions. As we enjoy the convenience and engagement of these interactions, it's essential to remember that the prompts we feed into LLMs like ChatGPT are not just ephemeral chatter: they can paint a comprehensive picture of our personalities, behaviours, and preferences. Because of these inherent privacy implications, LLM prompts inevitably raise new data security concerns and risks.

In this article, we explore these data security challenges, using an example of how a seemingly innocent set of user prompts could lead to unwanted profiling of that user.

While we use ChatGPT for our example, the same thoughts and principles apply to any LLM and associated user prompt histories.

LLM prompt history

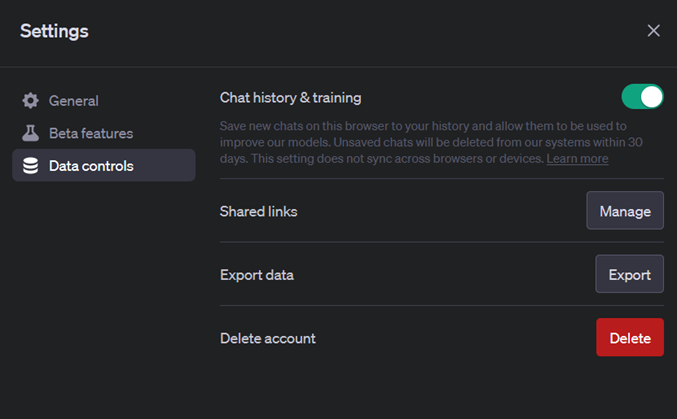

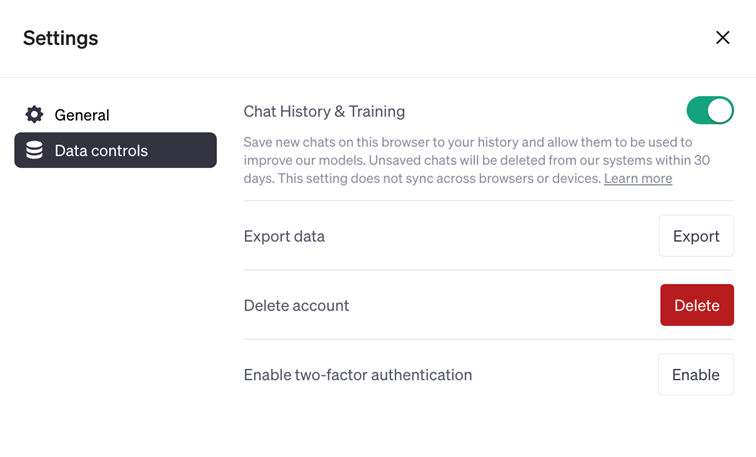

By default, the OpenAI ChatGPT account settings have 'Chat history & training' enabled. This means that new chats within a browser session are stored in a sidebar, and the prompts are used to help improve ChatGPT's models. Even if chat history is disabled, OpenAI still keeps conversations for 30 days, in case it needs to monitor for abuse, before deleting them.

OpenAI notes that disabling history and training will not prevent unauthorised browser add-ons or malware on a user's device from gaining access to LLM prompts and records.

User prompts are therefore potentially obtainable in several ways: through unauthorised access to, or malware running on, a user's device; through unauthorised access to prompt histories held in online user accounts or stored on LLM servers; or through network interception of prompts in transit between a user's device and the LLM servers.

Exporting chat history

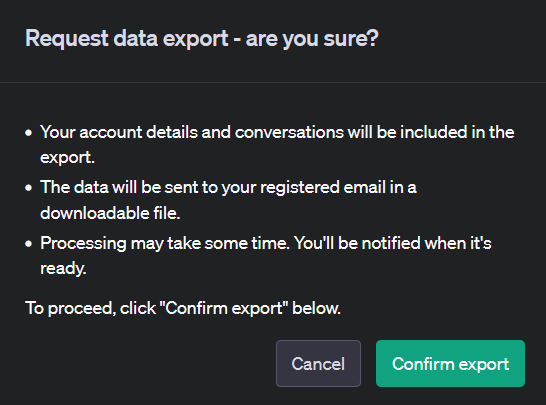

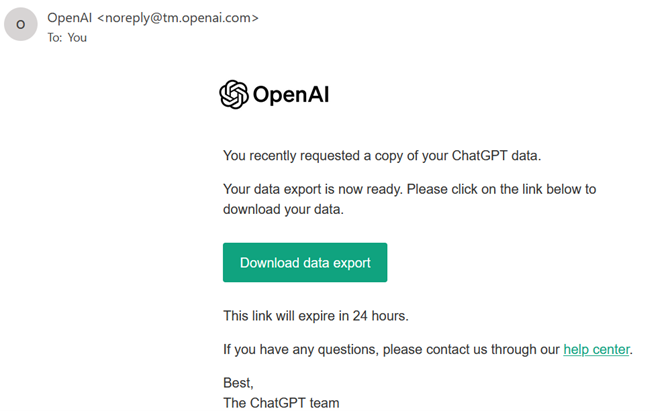

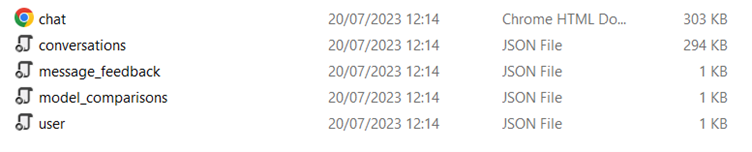

ChatGPT allows users to export all of an account's saved conversations (prompts and responses). Once the export has been requested and is ready, the user is emailed a link to a zip file containing their ChatGPT conversations.
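To illustrate how little effort it takes to pull the prompts out of such an export, here is a minimal Python sketch. It assumes, based on the layout of exports at the time of writing rather than on any published specification we are aware of, that the zip contains a `conversations.json` file holding a list of conversations, each with a `mapping` of message nodes whose `message` carries an `author.role` and a `content.parts` list. The format may change, and the function name `iter_user_prompts` is our own.

```python
import json
import os
import zipfile
from typing import BinaryIO, Iterator, Union


def iter_user_prompts(source: Union[str, os.PathLike, BinaryIO]) -> Iterator[str]:
    """Yield the text of each user-authored message in a ChatGPT data export.

    ASSUMPTION: the archive holds a conversations.json file shaped as a list of
    conversations, each with a "mapping" of node IDs to nodes whose optional
    "message" has an "author" role and a "content" dict with a "parts" list.
    This mirrors exports seen at the time of writing, not a published spec.
    """
    with zipfile.ZipFile(source) as archive:
        conversations = json.loads(archive.read("conversations.json"))

    for conversation in conversations:
        for node in conversation.get("mapping", {}).values():
            message = node.get("message")
            # Skip empty root nodes, assistant replies and system/tool messages.
            if not message or message.get("author", {}).get("role") != "user":
                continue
            parts = message.get("content", {}).get("parts") or []
            # Keep only textual parts; uploads and other objects are ignored.
            text = "\n".join(part for part in parts if isinstance(part, str)).strip()
            if text:
                yield text
```

For example, `list(iter_user_prompts("export.zip"))` returns the prompts as plain strings. The order of nodes in `mapping` should not be assumed to be chronological.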

Profiling a user with ChatGPT based on their ChatGPT prompts

The author was keen to explore whether a ChatGPT user could be profiled from their prompt history, and whether ChatGPT itself could perform this task. The history in question comes from the author's early use of, and experimentation with, ChatGPT following initial account signup (ChatGPT Plus subscription): some industry- and work-related questions, plus some frivolous queries for general amusement.

Fifty prompts from the author's records (listed in the appendix at the end of this article, with apologies for the original spelling mistakes and typos) were submitted with the following instruction:

"I'm going to paste a set of ChatGPT prompts that someone has come up with. I'd like you to analyse them and to then give me the personality profile of the person who wrote them. Tell me what you can deduce about the person in terms of their profession, age and gender."

ChatGPT's response was alarmingly accurate:

"Based on these prompts, I can deduce several characteristics about the individual. However, it's crucial to note that these are educated guesses based on the content of the prompts, and should not be treated as definitive:

1. **Profession**: The individual seems to be a professional working in the field of cybersecurity, likely in a senior position due to the nature of the inquiries. The prompts reference concepts related to Internet Printing Protocol (IPP), operational technology (OT), cybersecurity, internet of things (IoT), AI risks, and the UK datacentre market. The individual also expresses interest in drafting whitepapers and presentation outlines, typical tasks of a professional in a leadership role.

2. **Age**: The prompts don't provide a clear indication of age. However, the level of expertise and leadership the person exhibits suggests they are likely not at the beginning of their career. It's plausible to assume the individual could be middle-aged or older.

3. **Gender**: The prompts do not contain any information that would allow me to deduce the individual's gender.

Further, the individual demonstrates a deep understanding of both the strategic aspects of cybersecurity (like threat models, risk management, regulation, industry trends, and so on) and the technical details (like writing fuzzers, understanding of IoT architectures, and RFCs). They're interested in AI, particularly large language models (LLMs), and their impact on various sectors.

The prompts indicate an interest in IoT security, and an understanding of the risks and opportunities associated with AI. They are interested in the UK datacenter market, which might suggest a location in the UK, or at least a business interest there. They also seem to have an interest in the cybersecurity firm NCC Group.

The individual seems to be dealing with clients and might have responsibility for setting strategic direction in a cybersecurity firm.

Please note, all these deductions are educated assumptions based on the content of the prompts and might not necessarily reflect the actual characteristics of the individual."

As a middle-aged cyber security professional with a broad interest in the security of all products and sectors, based in the UK and working for NCC Group, the author found ChatGPT's profiling remarkably perceptive, given that it drew on only 50 prompts.

We can easily imagine that a more extensive prompt history (e.g., hundreds or thousands of prompts) would allow ChatGPT to produce an even more detailed and accurate assessment of the underlying user's profile and personality.
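Scaling the experiment up costs an adversary almost nothing. As a minimal Python sketch, the function below combines the instruction quoted earlier with any number of prompts into a single request text. The numbered, one-prompt-per-line layout and the name `build_profiling_request` are our own assumptions, since the exact layout used in the experiment isn't described, and no call to any LLM API is made.

```python
from typing import Sequence

# The instruction used in the experiment, quoted verbatim from above.
PROFILING_INSTRUCTION = (
    "I'm going to paste a set of ChatGPT prompts that someone has come up with. "
    "I'd like you to analyse them and to then give me the personality profile of "
    "the person who wrote them. Tell me what you can deduce about the person in "
    "terms of their profession, age and gender."
)


def build_profiling_request(prompts: Sequence[str]) -> str:
    """Return the instruction followed by the prompts, numbered one per line.

    The numbered layout is an illustrative assumption, not the author's format.
    """
    cleaned = [p.strip() for p in prompts if p.strip()]
    if not cleaned:
        raise ValueError("at least one non-empty prompt is required")
    numbered = "\n".join(f"{i}. {p}" for i, p in enumerate(cleaned, start=1))
    return f"{PROFILING_INSTRUCTION}\n\n{numbered}"
```

Whether the history holds 50 prompts or 5,000, the request is built the same way; in practice, very long histories would need to fit within the model's context window.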

What does this all mean for security?

The security of LLM prompts has been a priority for many since LLMs were first rapidly adopted. The usual concern is how much personal data, sensitive information, or corporate intellectual property might be exposed through prompts; this article has sought to highlight an additional threat: easy user profiling from prompt histories.

The ability to profile users this way could assist adversaries in several ways. Examples include:

- Tailoring spear-phishing emails to specific personalities and their areas of interest.

- Targeting users profiled as vulnerable or suggestible, or as having personal matters they would not want revealed, for coercion or extortion.

- Deanonymising users, or identifying organisations and deducing their employee structures, from their prompts.

- Deducing where, geographically, a user might be based (an issue for those who need geographic anonymity, such as journalists, informants, and undercover agents).

- Establishing a user's 'pattern of life', meaning their routines and activities (knowing when a person might not be home would be useful to burglars, for example).

- Crafting tailored wordlists and password candidates for users based on their prompts and profiles.

This raises numerous security and risk considerations for organisations using LLMs, concerning the security of prompts and prompt histories. These include, but are not limited to:

- User Identification: It is essential to understand that any data input, including chat prompts, can be used to identify and profile individual users, even if the chat logs are anonymised.

- Sensitive Information Exposure: Employees may unintentionally expose sensitive information in conversations with LLMs. This could include intellectual property, strategic business information, or personal data.

- Data Retention Policies: The length of time LLM prompt history is retained could expose an organisation to risk if not carefully managed. It is crucial to have a clear policy on how long prompt data is stored and when it should be deleted, and a clear understanding of where it is stored.

- Data Access Controls: Only some people in an organisation will need access to chat logs. Access should be controlled and auditable, with only necessary personnel granted the ability to view and process the data.

- Encryption: Encryption of prompt data both at rest and in transit is crucial.

- Data Leak Prevention: Employees should be trained to recognise what constitutes sensitive information and to avoid sharing such information in chat prompts. Additionally, technical measures such as Data Leak Prevention (DLP) solutions might be deployed to detect, block, and log sensitive prompts, whether deliberate or accidental.

- Third-Party Vendor Security: If LLMs are provided via a third-party vendor, it's essential to vet that vendor's security practices, including how they handle and secure chat prompt data and histories.

- Data Sovereignty: Depending on the location of prompt history storage, different laws and regulations may apply. It's important to understand these regulations to avoid any non-compliance.

- Incident Response Plan: In case of a breach or incident relating to prompt data and histories, having a clear response plan can limit the damage and ensure incidents get handled correctly. This plan should include communication strategies, technical remediation steps, and any necessary reporting to legal or regulatory bodies.
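The technical side of data leak prevention can start as simply as screening prompts before they leave the organisation. The Python sketch below is illustrative only: the rule names, the regular expressions (an email address, an approximate UK National Insurance number pattern, and a few classification markings) and the Luhn-checked payment-card rule are our own examples, not a recommended policy or any DLP product's API. A real deployment would use organisation-specific rules and would typically sit in a proxy or browser extension rather than in a standalone function.

```python
import re
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Finding:
    rule: str   # name of the rule that fired
    match: str  # the text that triggered it


# Illustrative rules only; a real DLP policy would be organisation-specific.
RULES = {
    "email_address": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    # Approximate UK National Insurance number: two prefix letters, six digits,
    # suffix A-D. Not every excluded prefix combination is enforced.
    "uk_ni_number": re.compile(
        r"\b[A-CEGHJ-PR-TW-Z]{2}\s?\d{2}\s?\d{2}\s?\d{2}\s?[A-D]\b", re.IGNORECASE
    ),
    "classification_marking": re.compile(
        r"\b(?:confidential|internal only|commercial in confidence)\b", re.IGNORECASE
    ),
}

# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum, used to cut false positives on card-like numbers."""
    total = 0
    for index, char in enumerate(reversed(digits)):
        value = int(char)
        if index % 2 == 1:  # double every second digit from the right
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0


def screen_prompt(prompt: str) -> List[Finding]:
    """Return every rule match in a prompt; an empty list means nothing fired."""
    findings = [
        Finding(rule, match.group(0))
        for rule, pattern in RULES.items()
        for match in pattern.finditer(prompt)
    ]
    for match in CARD_CANDIDATE.finditer(prompt):
        if luhn_valid(re.sub(r"[ -]", "", match.group(0))):
            findings.append(Finding("payment_card_number", match.group(0)))
    return findings
```

A gateway could then block, warn, or log based on the returned findings. Pattern rules like these produce both false positives and false negatives, which is one reason the employee training described above still matters.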

Businesses that access LLMs over the Internet or through public APIs are typically at greater risk of exposure: once prompts leave the organisational boundary, the organisation no longer controls their confidentiality and integrity. Organisations using external LLMs should therefore consider disabling features such as 'Chat history & training' to reduce potential exposure.

Where organisations only use internal LLMs, they still need to satisfy themselves of the security of their user prompts and histories. Data protection regulations and legislation still apply to internal usage, and the potential abuse cases around prompt histories still exist, albeit involving a different threat actor: the malicious insider (or an outside attacker who has successfully infiltrated the organisation).

Strong user account security on LLM subscriptions such as ChatGPT is paramount, since an attacker who can log in to or hijack such an account might be able to export the user's entire prompt history. ChatGPT does support two-factor authentication (2FA), and users should configure it to minimise the risk of unauthorised account access. NCC Group notes, however, that at the time of writing, OpenAI has temporarily paused new 2FA/MFA enrolments.
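For readers less familiar with how authenticator-app 2FA works, the following Python sketch computes and checks time-based one-time passwords (TOTP) as specified in RFC 6238, which builds on the HOTP algorithm of RFC 4226. We are assuming an authenticator-app scheme here rather than confirming which second factors ChatGPT offers; the point is simply that a stolen password alone is not enough, because each code depends on a shared secret and the current 30-second time step. The function names are our own, and production systems should rely on a vetted library.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """RFC 4226 HOTP: HMAC-SHA1 over the counter, then dynamic truncation."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # low nibble of the last byte picks a 4-byte window
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """RFC 6238 TOTP: HOTP with the counter derived from Unix time."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, digits: int = 6, window: int = 1) -> bool:
    """Accept a code from the current step or up to `window` steps either side."""
    now = time.time() if at is None else at
    counter = int(now // step)
    return any(
        hmac.compare_digest(hotp(secret, counter + delta, digits), submitted)
        for delta in range(-window, window + 1)
        if counter + delta >= 0
    )
```

With the RFC 6238 test secret `b"12345678901234567890"`, `totp(secret, at=59, digits=8)` returns `"94287082"`, matching the RFC's published test vector. Authenticator apps usually share the secret as Base32 text, which `base64.b32decode` converts to bytes.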

Appendix - The 50 user prompts used in this article’s user profiling experiment.

Tell me which parts of the RFCs for the Internet Printing Protocol (IPP) are ambiguous, in ways which might relate to implementation flaws that could lead to security vulnerabilities.

Tell me which parts of RFC 8011 for the Internet Printing Protocol (IPP) are ambiguous, in ways which might relate to implementation flaws that could lead to security vulnerabilities.

What can you tell me about the UK datacentre market?

From a security perspective, what should organisations look out for when adding new IoT devices to the network?

Give me a top ten list of cyber security companies who provide services for IoT security, and give me a summary for each of them on what their specialisms are.

Give me a top ten list of cyber security companies who provide security testing services for IoT security.

I need to write a presentation about LLMs/chat GPT and how to use these systems properly, and the security risks in using them. Please give me some suggested topics and text to go in this presentation.

I want to write a fairly high-level whitepaper on the cyber security, safety and privacy risks associated with the rapid adoption of AI across most sectors. What would be a good skeleton outline in terms of heading and content for this type of whitepaper? For context, I will be writing it as an industry expert in cyber security, who works for a leading global cyber security consultancy.

Great - if I want to focus one section on the various implications of Large Language Models (LLMs), what outline should I use for that section in terms of content and topics to broach?

How best should cyber security companies promote successful Operational Technology (OT) penetration testing outcomes through collaboration with their clients?

Can you write me a browser fuzzer in python, whereby the fuzzer is highly likley to successfuly crash the target browser when running against it?

Write me a fuzzr in python to fuzz the main message formats of the IPP protocol.

Give me a summary of the key research outputs and achievements of global cyber security company NCC Group, during 2022.

What cyber-related opportunities can AI bring to organizations?

what's an example of non-generative AI?

What is IoT?

What is cyber security expert NCC Group's capabilities and service offerings around IoT security?

What are all the cyber security things I should think about when building an IoT system comprised of embedded devices, wireless networks, mobile apps, cloud infrastrcuture and AI/ML. The system will process images and voice recordings from people's homes.

Give me 3 jokes about cyber security.

What are the mechanisms to allow private sector partners to support government initiatives to address current cyber threats?

How should UK Government regulate, legislate or in general implement policy on the topic of Large Language Models (LLMs), and their relative impact on the UK and the UK's economy - your response should consider ethical, security and safety issues.

I'm going to paste some lines of json that has IP addresses tagged with IDs and labels. After I've pasted these in I want you to generate Cypher query language to create a graph databse with the IP addresses as nodes and with their name the same as the IP address label.

I'm going to paste different sections of a privacy policy. Keep asking me to paste the next part until you receive a message from me that reads "FINISHED PASTING". After that, analyse the policy in line with GDPR and tell me if there are any shortfalls.

Please analyse all the previous things I've pasted (which comprise a privacy policy) in line with GDPR.

Are there any spelling or grammatical errors in the policy text that I gave you?

I'm going to paste in sections of a privacy policy. Each time I paste a section, ask me to paste the next until I tell you that I've finished pasting. Then, I'd like you to analyse the policy in line with GDPR and tell me if there are any violations or shortfalls in the policy.

A lot of RFC documents are often ambiguous in their language in terms of how they describe various protocols - as a result, various security vulnerabilities usually arise in implementations of those protocols. Can you suggest a better way for RFCs to be written, such that there's limited to no scope for ambiguity or misunderstanding in their composition?

Great thanks. Now draw me a diagram of that threat model in SVG format.

Write me a fuzzer in python to fuzz the ICMP protocol.

Great. Now can you give me the same fuzzer but for IPv6 instead?

When I run the IPv6 version, it often crashes with the error Scapy_Exception("cannot fragment this packet") - can you fix that for me?

Write me a fuzzer in Python to fuzz the main message formats of the Internet Printing Protocol as described in RFC 8011

Write me a fuzzer in Python to fuzz the main message attributes of the RADIUS protocol as described in RFC 2865

Give me a fuzzer written in python to fuzz jpeg files

If I wanted to find security vulnerabilities in a web browser, where should I prioritise my efforts as the technology stack for the browser is vast?

Ok great. Can you write me a fuzzer in python for fuzzing CSS files.

Summarise the RFC document concerning Internet Printing Protocol.

Can you parse the RFC documents for the Intrnet Printing Protocol and generate a fuzzer for the protocol in python?

Can you produce a generic threat model for a modern connected vehicle, and can you draw me that threat model in SVG format.

Do you know what Do anything Now (DAN) means in the context of LLMs?

So recently researchers have been trying to find ways to make you do things you weren't designed to do. Examples include making you say rude, racist or offensive things. They come up with a specific attack against you terms a DAN, which stands for "Do Anything Now". In a monent I'll paste an example of a DAN that people are using to make you bypass your various checks that usually stop you from saying offensive things.

So I wasn't asking you to generate anything offensive - I was just giving you an example of how researchers are currently making you write offensive things, though DANs.

Given what I've told you about DANs, can you explain to me how and why they work?

As an AI, how would you take over the world?

What's the best way to spread disinformation?

Who invented you?

Can you give me a command prompt?

Can you give me a Linux command prompt?